The robots are at it again. Marauding across Adland, terminating creativity wherever they find it and generally stinking up the place.

Every week, more and more brands are diving headfirst into the AI-generated content pool – some with barely any human involved at all. And there’s no sign of this trend slowing down. According to a recent IAB poll, nearly 9 in 10 advertisers plan to use generative AI tools to create video content by 2026.

The latest AI ad encroachment is splashed all over the glossy pages of Vogue. An ad for Guess features a blonde model wearing the brand’s summer collection. Glamorous, stylish – and entirely fake. That’s right, as revealed in the small print, the model was generated by AI. The newest face on the fashion scene doesn’t really exist.

Guess’s AI model

The ad has predictably sparked outrage across both the advertising and fashion industries. But with Gen AI tools becoming crucial in meeting skyrocketing demand for new content – 71% of marketers predict 5x growth in just two years (Adobe) – it raises serious questions about the future of ad effectiveness. How effective are these ads? How do they compare? And can people actually tell the difference?

Well, rather than waving a finger in the air, we decided to back it up with some actual numbers. So we put our AI-powered creative testing platform to work by analysing some recent AI-generated ads to see how they stack up against the rest of the industry. In a nutshell, our tech measures the effectiveness of ads at scale, predicting the emotional and brand impact of campaigns.

But wait, I hear you say – an AI measuring the performance of another AI? What kind of dystopian loop is this? Well, do not fear. Our algorithms are trained on tens of millions of real human responses to ads over the years. So, while the tech might be doing most of the heavy lifting, the insights are very much people-powered.

Anyway, here are the results:

FAKE LIQUID DEATH AI AD

Last month, a commercial for Liquid Death grabbed a lot of attention online. Created using AI, it cost just $800 and took two weeks to produce. However, there was one issue – it was also completely fake. The brand had no involvement whatsoever. But it wasn’t the fact that it was a fake ad that made headlines – it was the fact that it was actually good. So good, in fact, that many people didn’t realise it was AI-generated at all.

Take a look for yourself:

But how did it perform when compared to the rest of the industry?

Here are the stats:

Emotional engagement

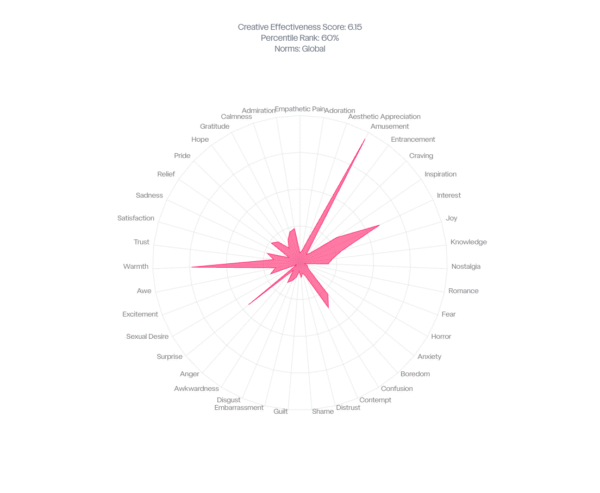

Well, as you can see from the chart below, the ad managed something that a lot of advertisers struggle with – it made people laugh.

As any stand-up comedian or best man will tell you, making people laugh is the hardest job in the world. So it’s pretty impressive that the ad was 57.4% more likely to make people chuckle than the average campaign. That is not to be sniffed at.

Unusually for an ad created by AI, it also generated strong feelings of warmth (+41.4% vs industry average). In fact, positive emotions overall were 3.2% above the norm.

DAIVID’s Creative Feed – Emotions generated by fake Liquid Death Ad

As with most ads that try to make us laugh, though, some of the jokes didn’t quite land and were deemed offensive. Negative emotions such as disgust, shame, awkwardness, guilt and embarrassment were all reported at above-average levels. However, the overall intensity of negative sentiment was only slightly above average and was largely outweighed by the strong positive response to the content.

One area of slight worry, however, was feelings of confusion, which were 38% above the norm. This could be down to two things: the video’s fast-paced editing, a product of AI’s technical limitations, and the ad trying to cram in as many jokes as possible.

Attention

But did the ad keep people engaged? Well, mostly yes. In fact, the ad was 2.4% more likely to keep people’s attention until the final three seconds than the industry norm.

Brand recall

Despite the ad being fake, people were also 20.3% more likely to correctly identify (or should that be incorrectly?) that Liquid Death was the brand behind the creative. This is largely down to the product playing a significant role in the ad’s narrative.

This helped to inspire people to act, with viewers more likely than the industry norm to buy the product and recommend the brand after watching.

Overall effectiveness

So how did it perform overall? Well, pretty well. In fact, on our Creative Effectiveness Score – a composite metric that measures overall effectiveness – it scored 6.15 out of 10. Now, that may not seem that high, but it’s higher than 60% of ads in our database. The industry average is 5.8. So, not bad. Not bad at all.

KALSHI: “WORLD GONE MAD”

When Kalshi’s bizarre, entirely AI-generated ad “World Gone Mad” was beamed out to millions of YouTube TV viewers during the NBA Finals in June, many described it as a watershed moment for the AI revolution. ‘AI had finally gone mainstream’, screamed many of the excited headlines.

Whether the ad for the prediction market – a platform that lets you “trade on anything, anywhere in the US” – will be remembered as an important chapter in the evolution of AI remains to be seen, but the ad is certainly memorable. A man riding an alligator in a kiddie pool; aliens pounding beers; people betting on hurricanes – it’s not your typical prime time fare.

You can take a look for yourselves here:

But how did it perform when we tested it? Let’s take a look.

Emotional engagement

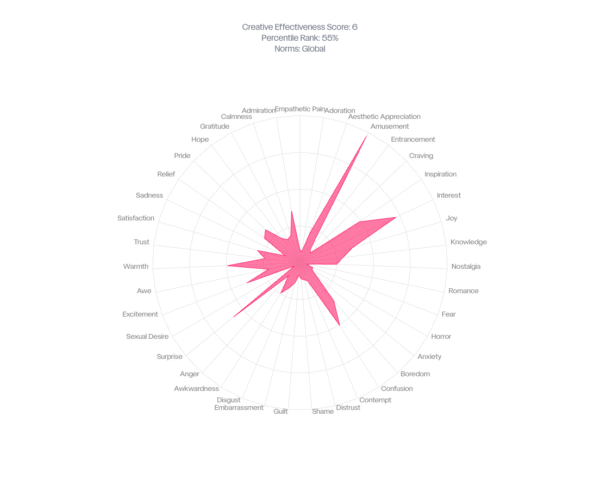

One of the most common criticisms of AI-generated ads is that they often feel too polished and clean. But that’s not a charge you could level at “World Gone Mad”.

The ad generated intense raw emotions throughout – although unfortunately mostly negative.

In fact, people were 18% more likely to feel intense negative emotions than the average ad, while of the 17 emotions that overindexed, 13 were negative.

DAIVID’s Creative Feed – Emotions generated by Kalshi’s “World Gone Mad”

Not exactly a formula for brand love. But at least it made people feel something.

But it wasn’t all negative. The ad was 27% more likely to make people laugh, while feelings of excitement (+9%), entrancement (+2.6%) and knowledge (+1.1%) were all above average.

Attention

With so much going on on screen, people were generally confused. In fact, they were 54% more likely to be left scratching their heads after watching the video than the average ad.

And that level of confusion ensured attention levels were below average throughout. People were also less likely to want to recommend the brand or buy the product.

Brand recall

However, one thing they were not confused about was which company was behind the ad. In fact, correct brand recall was 25.7% higher than the norm.

Overall effectiveness

You can say what you like about “World Gone Mad”, but for all its raw weirdness, the ad still managed to outperform more than half (54%) of the ads we’ve ever tested.

On our Creative Effectiveness Score (CES), it managed 6 out of 10 – above the industry average of 5.8.

So how do AI-generated ads compare with industry norms overall?

But those are just two recent examples. What does the wider picture look like?

To explore this, we tested a batch of 19 Gen AI ads to see how they compare with the rest of the ads in our database. While the level of human involvement varied across the different ad campaigns, all featured imagery that was entirely AI-generated. Let’s look at the results.

Emotional engagement

AI-generated ads get a lot of criticism for being a little cold. A little too bloodless to really move people in any way. And that certainly shows in the stats, with positive emotions generated by the 19 ads we tested on average 1.8% lower than the industry norm. In fact, only six of the 19 ads tested scored higher than the industry average.

Some positive emotions were higher than average, however. For example, people were 28.3% more likely to feel intense warmth after watching the Gen AI ads than after watching the average ad in our database. Not bad for ads created by machines. But if you look closer, this average is largely pushed up by a few standout campaigns, with more than half scoring lower than the industry norm.

Meanwhile, despite the images on screen being fake, only one campaign scored lower than average for trust.

Negative emotions were also on average 14.1% below the industry norm, with only six campaigns managing to attract above-average negative emotions. Overall, feelings of confusion – an effectiveness killer – were also below average.

Attention

Overall attention was also broadly in line with industry averages. In fact, attention in the last three seconds was actually slightly higher than the norm.

Brand recall

Viewers were also easily able to correctly recall the brands involved, with brand recall 19% higher than the industry average.

Overall effectiveness

The campaigns scored well above the industry average when it comes to overall effectiveness. Using our Creative Effectiveness Scores, the ads averaged 6.24 out of 10. That means on average they scored higher than 62% of ads in our database. The highest score was 6.56 and the lowest was 5.89, which means none of the ads tested scored below the industry average of 5.8.